I’m already quite sceptical of AI image generation. I’m wary of the way it seems to flippantly skirt around (or entirely ignore) copyright, and I worry about the negative feedback loop of replacing the artists who make art. Caution is important to practice, especially now that red_panda, a brand-new image-generation tool, has just been spotted on a site dedicated to voting for the best AI and rocketed straight to the top of the leaderboards.

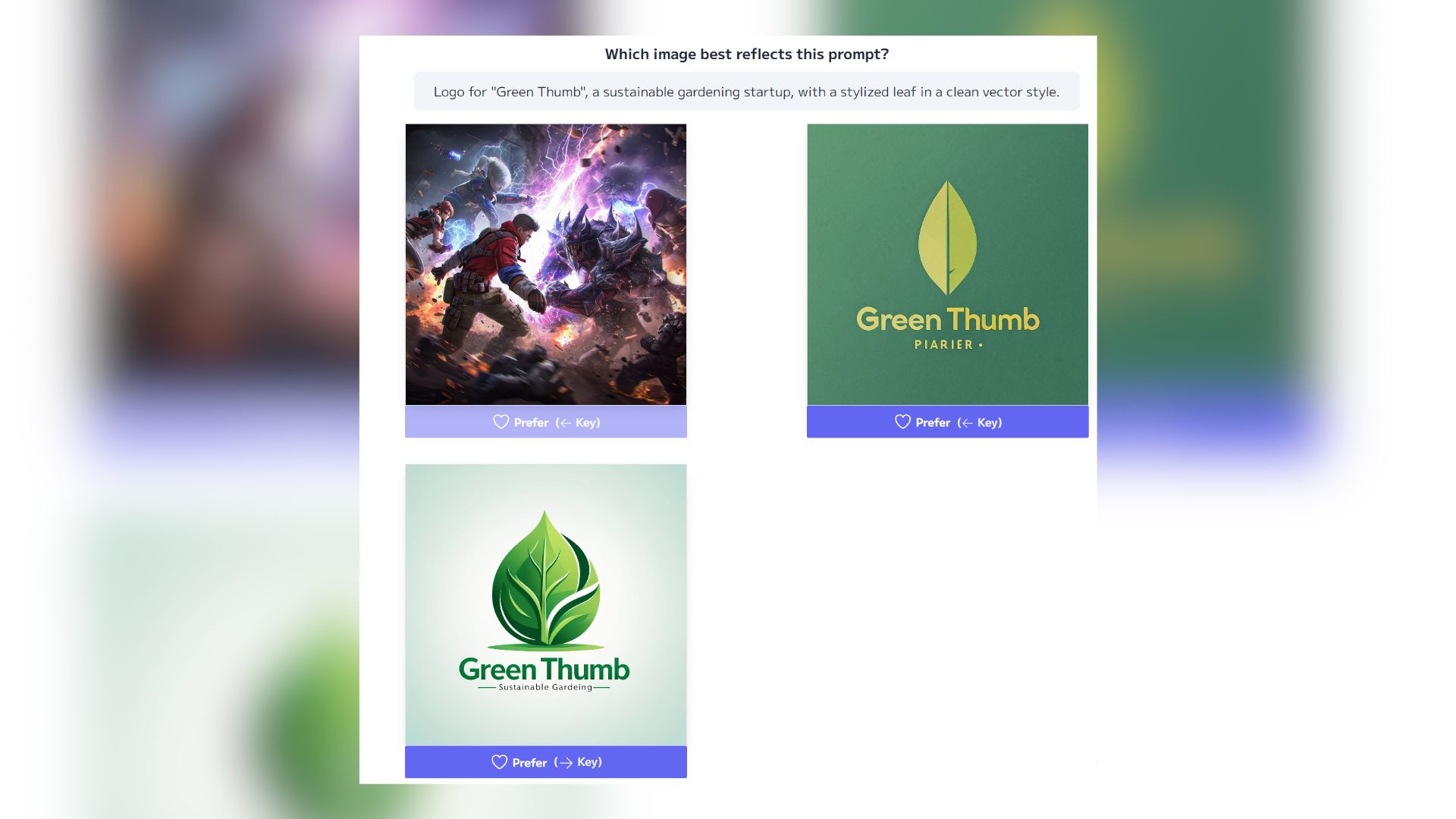

As reported by TechCrunch, a site called Artificial Analysis has a section called “Arena” that lets users vote on which of two images best fits a given prompt, with the two images generated by different AI image generation models.

In the leaderboard, red_panda sits at the very top, with a 72% win rate. This means that, of all the battles its images have found themselves in, it has lost only 28%.

Some in the comments of Artificial Analysis’ post claim this could be a new version of Midjourney or Baidu’s AI tool, and this could certainly be the case given the creator’s name is obscured, but only time will tell what exactly red_panda is. This new tool has the highest Elo rating (generally perceived as a metric of quality) in the data pool and a fast generation time of seven seconds, but no listed price per image, as so little is known about it.
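For readers unfamiliar with Elo, here is a minimal sketch of how a standard Elo rating could be updated after a single head-to-head vote. It uses the textbook formula with a scale of 400 and an assumed K-factor of 32; the function names and starting ratings are purely illustrative, and nothing here is confirmed to match how Artificial Analysis actually calculates its leaderboard.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_ratings(rating_a: float, rating_b: float, a_won: bool,
                   k: float = 32.0) -> tuple[float, float]:
    """Return new (A, B) ratings after one battle.

    k is an assumed K-factor; real leaderboards may use a different value
    or a different rating scheme entirely.
    """
    expected_a = expected_score(rating_a, rating_b)
    actual_a = 1.0 if a_won else 0.0
    delta = k * (actual_a - expected_a)
    # Whatever A gains, B loses, so the rating pool stays constant.
    return rating_a + delta, rating_b - delta


# Two hypothetical models start level at 1000; model A wins the vote.
new_a, new_b = update_ratings(1000, 1000, a_won=True)
print(new_a, new_b)  # 1016.0 984.0
```

The useful property is that beating a highly rated opponent moves a model’s rating more than beating a weak one, which is why Elo and raw win rate can tell slightly different stories.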

The win rate is an impressive figure, which is why the Artificial Analysis X account noted it. At the time, red_panda had an even more impressive track record, with a 79% win rate.

The red panda name does perhaps feel in poor taste given the energy costs of AI models like this, as the red panda is an endangered animal, partially due to deforestation and climate change. Judging by generation times, the images shown in each battle don’t appear to be created in the moment, as some models can take up to a minute to generate an image. If you aren’t a fan of AI image generation, playing this game doesn’t seem to directly contribute to it in a meaningful way.

I tried voting in the “Arena” myself and came away quite surprised by my own personal leaderboard. Within my first 60 choices, red_panda had come up only twice and sat at the very top of my leaderboard, with a 100% win rate.

By 150 choices, that win rate had dropped all the way down to 61% for me, with 11 total selections. Two different versions of the FLUX model hogged the top of my personal leaderboard. These same two models sit second and third in the global leaderboard.

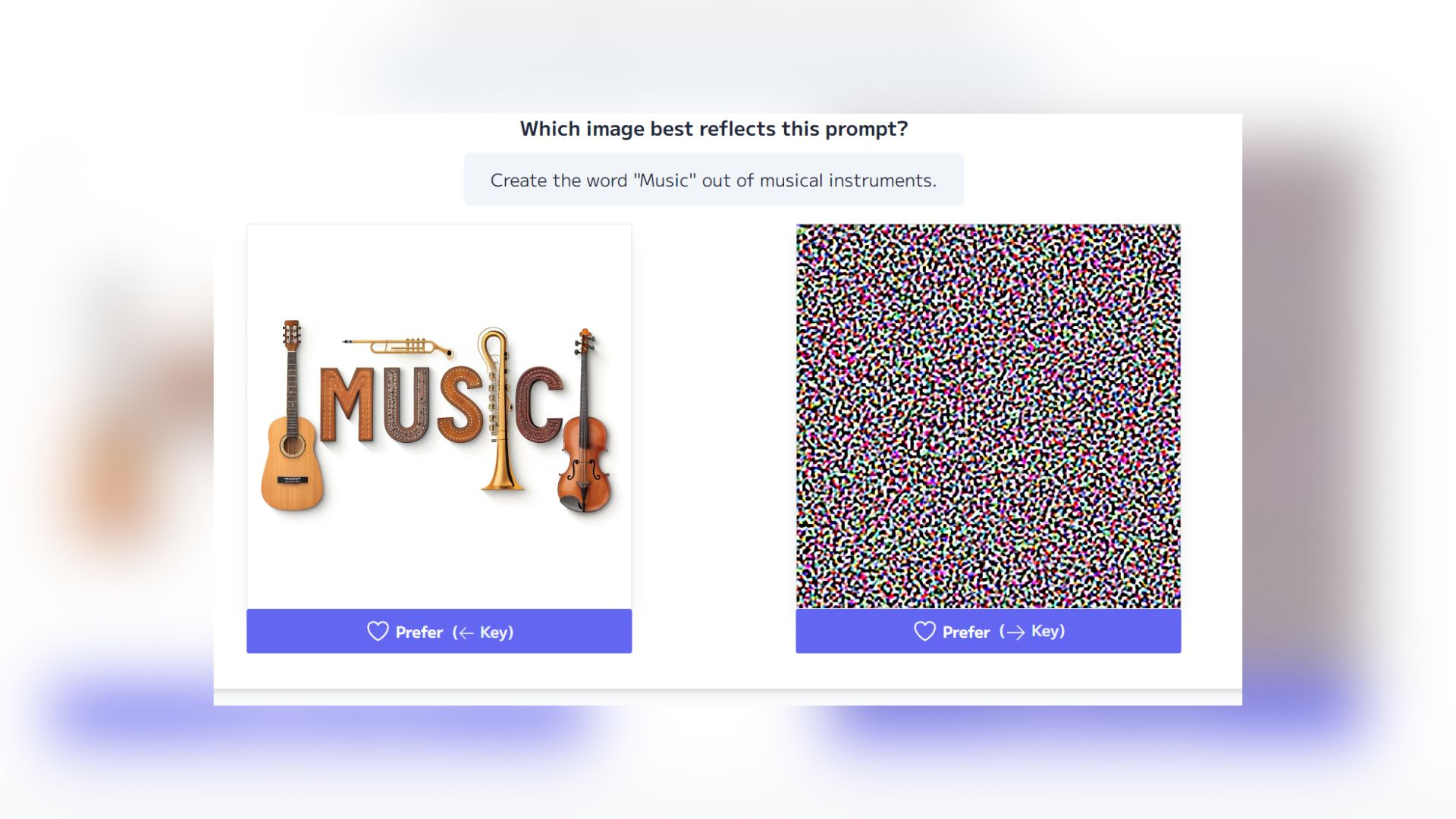

The AI model red_panda mostly took those early wins from me because it’s one of a handful of models that can actually handle text with any competency. It is also pretty good at removing that ill-proportioned glossiness that many AI images tend to have. The site itself regularly ran into problems, once running the same image from the same model against itself. Then, one time, I was given three images (one of which was for the wrong prompt and couldn’t be clicked) and the other two had all of their text wrong. Another of red_panda’s wins came against an image of colourful static, where the model or site had simply failed to display the image correctly.

It’s also worth noting that I ran into duplicate images in that time, so these figures could technically be weighted, if a company wanted that to happen.

Though this image generation tool does seem pretty impressive, based on selection figures, it’s important to note that much of its competition is pretty poor, its win rate has gone down with time, and it has been selected a total of just under 15,000 times in battles.

We don’t yet know what it is, how it works, or where it scrapes the imagery it is trained on. From my brief time testing it, the model seems to be on the higher end of AI image generation tools, but I still spotted some problems in the arena.